More than 1,000 British holidaymakers are trapped in the Alps after freak snowfalls severed road, rail and air links. As much as 18ft of snow has fallen in Austria over the past few days, with falling trees and rocks blocking many routes.

Some 1,000 British skiers are stuck in the Austrian town of Ischgl alone, with more trapped in the resorts of Galtur, St Anton and Arlberg - which have seen as much as 10ft of snow in the past 48 hours.

Yesterday authorities in the region raised the avalanche warning to stage three, or `considerable risk', and holidaymakers were advised to stay indoors.

Few ski lifts were operating and many pistes were closed down as darkness fell.

One of the few lifts still working shut down in the sub-zero temperatures, stranding 150 skiers on the slopes in the popular resort of Mayrhofen.

Two Austrian Army helicopters were scrambled to airlift a number of inexperienced skiers - including children - down the difficult slopes above the Ahornbahn lift.

High Alpine winds continue to create the potential for fearsome snowslides according to the local Avalanche Commission, which is monitoring the situation.

Some 76,000 households, hotels, pensions and guest houses have been hit by power cuts over the past few days as a result of the storms. `It is a winter that went from zero to 100', said Andreas Steibl, tourism director for the Paznaun-Ischgl resort, yesterday.

The main access road, along the Paznaun Valley from the town of Landeck to Ischgl, was open for a while on Saturday - allowing holidaymakers in and out of the resort. But since then the road has been closed because of the high risk of avalanches.

`Although the roads have been closed, the resort itself has been operating as normal with skiers and boarders experiencing amazingly good conditions,' Mr Steibl said.

Before Christmas the area enjoyed high temperatures and many in the Tyrol region now fear an economic wipeout.

Dutch tourist Romke Loopke and his family were buried in their car by an avalanche. He said: `It was scary as hell. One minute it was all white and then the next totally dark. Luckily I got a window open and managed to dig my way out.'

John Thorpe, 33, on holiday from Glasgow with wife Gill and their two sons, told an Austrian radio station in Ischgl: `It's a bit like being trapped in paradise.

`We were due to head for Salzburg and home today but we can't get down the road. The railway line is out and the road is blocked. But I don't think you will find many people complaining - it's beautiful and thrilling to see nature this powerful this close to.'

The region's mayor, Rainer Silberberger, said: `We are working to clear roads and secure the snow falls. I've never seen weather like it.'

One British ski rep said there was growing frustration among some holidaymakers who were supposed to be back at work yesterday and from those who were due to start their trips at the beginning of the week. He said: `While I think it is fair to say most people are happy to be trapped, there are those who urgently need to get home and those who want to get started on their holidays, and so a sense of frustration is mounting.'

And ski instructor Sarah Hannibal, who works in Ischgl, said: `The skiing conditions are fantastic, although obviously no one is going off-piste at the moment because of the amount of snow. It has been very windy at the top of the mountain which means visibility can be affected by wind-blown snow - but the holidaymakers are having a wonderful time.

`Many ski instructors who live in other parts of the valley are the ones affected most by the conditions and they have had to make arrangements to stay with friends or at hotels in Ischgl. That includes me as I live in Galtur and have been stranded in Ischgl but it's no hardship when the skiing is so good.'

SOURCE

Will Replicated Global Warming Science Make Mann Go Ape?

About 10 years ago, December 20, 2002 to be exact, we published a paper titled “Revised 21st century temperature projections” in the journal Climate Research. We concluded:

Temperature projections for the 21st century made in the Third Assessment Report (TAR) of the United Nations Intergovernmental Panel on Climate Change (IPCC) indicate a rise of 1.4 to 5.8°C for 1990–2100. However, several independent lines of evidence suggest that the projections at the upper end of this range are not well supported…. The constancy of these somewhat independent results encourages us to conclude that 21st century warming will be modest and near the low end of the IPCC TAR projections.

We examined several different avenues of determining the likely amount of global warming to come over the 21st century. One was an adjustment to climate models based on (then) new research appearing in the peer-reviewed journals that related to the strength of the carbon cycle feedbacks (less than previously determined), the warming effect of black carbon aerosols (greater than previously determined), and the magnitude of the climate sensitivity (lower than previous estimates). Another was an adjustment (downward) to the rate of the future build-up of atmospheric carbon dioxide that was guided by the character of the observed atmospheric CO2 increase (which had flattened out during the previous 25 years). And our third estimate of future warming was the most comprehensive, as it used the observed character of global temperature increase—an integrator of all processes acting upon it—to guide an adjustment to the temperature projections produced by a collection of climate models. All three avenues that we pursued led to somewhat similar estimates for the end-of-the-century temperature rise. Here is how we described our findings in the paper’s Abstract:

Since the publication of the TAR, several findings have appeared in the scientific literature that challenge many of the assumptions that generated the TAR temperature range. Incorporating new findings on the radiative forcing of black carbon (BC) aerosols, the magnitude of the climate sensitivity, and the strength of the climate/carbon cycle feedbacks into a simple upwelling diffusion/energy balance model similar to the one that was used in the TAR, we find that the range of projected warming for the 1990–2100 period is reduced to 1.1–2.8°C. When we adjust the TAR emissions scenarios to include an atmospheric CO2 pathway that is based upon observed CO2 increases during the past 25 yr, we find a warming range of 1.5–2.6°C prior to the adjustments for the new findings. Factoring in these findings along with the adjusted CO2 pathway reduces the range to 1.0–1.6°C. And thirdly, a simple empirical adjustment to the average of a large family of models, based upon observed changes in temperature, yields a warming range of 1.3–3.0°C, with a central value of 1.9°C.

We thus concluded:

Our adjustments of the projected temperature trends for the 21st century all produce warming trends that cluster in the lower portion of the IPCC TAR range. Together, they result in a range of warming from 1990 to 2100 of 1.0 to 3.0°C, with a central value that averages 1.8°C across our analyses.

Little did we know at the time, but behind the scenes, our paper, the review process that resulted in its publication, the editor in charge of our submission, and the journal itself, were being derided by the sleazy crowd that revealed themselves in the notorious “Climategate” emails, first released in November, 2009. In fact, the publication of our paper was to serve as one of the central pillars that this goon squad used to attack the integrity of the journal Climate Research and one of its editors, Chris de Freitas.

The initial complaint about our paper was raised back in 2003 shortly after its publication by Tom Wigley, of the US National Center for Atmospheric Research and University of Toronto’s L. D. Danny Harvey, who served as supposedly “anonymous” reviewers of the paper and who apparently had a less than favorable opinion about our work that they weren’t shy about spreading around. According to Australian climate scientist Barrie Pittock:

I heard second hand that Tom Wigley was very annoyed about a paper which gave very low projections of future warmings (I forget which paper, but it was in a recent issue [of Climate Research]) got through despite strong criticism from him as a reviewer.

So much for being anonymous.

The nature of Wigley and Harvey’s dissatisfaction was later made clear in a letter they sent to Chris de Freitas (the editor at Climate Research who oversaw our submission), in which they demanded to know the details of the review process that led to the publication of our paper over their recommendation for its rejection. Here is an excerpt from that letter:

Your decision that a paper judged totally unacceptable for publication should not require re-review is unprecedented in our experience. We therefore request that you forward to us copies of the authors responses to our criticisms, together with: (1) your reason for not sending these responses or the revised manuscript to us; (2) an explanation for your judgment that the revised paper should be published in the absence of our re-review; and (3) your reason for failing to follow accepted editorial procedures.

Wigley asked Harvey to distribute a copy of their letter of inquiry/complaint to a large number of individuals who were organizing some type of punitive action against Climate Research for publishing what they considered to be “bad” papers. Apparently, Dr. de Freitas responded to Wigley and Harvey’s demands with the following perfectly reasonable explanation:

The [Michaels et al. manuscript] was reviewed initially by five referees. … The other three referees, all reputable atmospheric scientists, agreed it should be published subject to minor revision. Even then I used a sixth person to help me decide. I took his advice and that of the three other referees and sent the [manuscript] back for revision. It was later accepted for publication. The refereeing process was more rigorous than usual.

This did little to appease those wanting to discredit Climate Research (and prevent the publication of “skeptic” research) as evidenced by this email from Mike Mann to Tom Wigley and a long list of other influential climate scientists:

Dear Tom et al,

Thanks for comments–I see we’ve built up an impressive distribution list here!

…

Much like a server which has been compromised as a launching point for computer viruses, I fear that “Climate Research” has become a hopelessly compromised vehicle in the skeptics’ (can we find a better word?) disinformation campaign, and some of the discussion that I’ve seen (e.g. a potential threat of mass resignation among the legitimate members of the CR editorial board) seems, in my opinion, to have some potential merit.

This should be justified not on the basis of the publication of science we may not like of course, but based on the evidence (e.g. as provided by Tom and Danny Harvey and I’m sure there is much more) that a legitimate peer-review process has not been followed by at least one particular editor.

Mann went on to add “it was easy to make sure that the worst papers, perhaps including certain ones Tom refers to, didn’t see the light of the day at J. Climate.” This was because Mann was serving as an editor of the Journal of Climate and was indicating that he could control the content of accepted papers. But since Climate Research was beyond their direct control, it required a different route to content control. Thus pressure was brought to bear on the editors as well as on the publisher of the journal. And, they were willing to make things personal. For a more complete telling of the type and timeline of the pressure brought upon Chris de Freitas and Climate Research see this story put together from the Climategate emails by Anthony Watts over at Watts Up With That.

Now, let’s turn the wheels of time ahead 10 years, to January 10, 2012. Just published in the journal Geophysical Research Letters is a paper with this provocative title: “Improved constraints on 21st-century warming derived using 160 years of temperature observations” by Nathan Gillett and colleagues from the Canadian Centre for Climate Modelling and Analysis of Environment Canada (not a group that anyone would confuse with the usual skeptics). An excerpt from the paper’s abstract provides the gist of the analysis:

Projections of 21st century warming may be derived by using regression-based methods to scale a model’s projected warming up or down according to whether it under- or over-predicts the response to anthropogenic forcings over the historical period. Here we apply such a method using near surface air temperature observations over the 1851–2010 period, historical simulations of the response to changing greenhouse gases, aerosols and natural forcings, and simulations of future climate change under the Representative Concentration Pathways from the second generation Canadian Earth System Model (CanESM2).

Or, to put it another way, Gillett et al. used the observed character of global temperature increase—an integrator of all processes acting upon it—to guide an adjustment to the temperature projections produced by a climate model. Sounds familiar!!
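To make the idea of scaling a model projection by its fit to observations concrete, here is a minimal sketch. It is not the method of Gillett et al., who regress observations on several simulated forcing responses at once; it assumes a single combined signal and ordinary least squares through the origin. All numbers and function names (`scaling_factor`, `constrained_projection`) are invented for illustration.

```python
def scaling_factor(observed, simulated):
    """Least-squares slope (through the origin) of observed on simulated anomalies.

    A value below 1 means the model over-predicts the historical response.
    """
    numerator = sum(s * o for s, o in zip(simulated, observed))
    denominator = sum(s * s for s in simulated)
    return numerator / denominator


def constrained_projection(observed, simulated_hist, simulated_future):
    """Scale the model's future warming by its historical scaling factor."""
    beta = scaling_factor(observed, simulated_hist)
    return [beta * value for value in simulated_future]


# Hypothetical temperature anomalies in degrees C (not real data).
simulated_hist = [0.0, 0.2, 0.4, 0.6, 0.8]
# By construction the "observations" warm at 75% of the simulated rate.
observed = [0.75 * s for s in simulated_hist]
# Hypothetical unconstrained model projections for three scenarios.
simulated_future = [2.0, 3.0, 4.0]

beta = scaling_factor(observed, simulated_hist)
scaled = constrained_projection(observed, simulated_hist, simulated_future)
print(beta, scaled)
```

In this toy case the model runs hot over the historical period, so every projection is scaled down by the same factor. A real analysis would also carry the uncertainty in the scaling factor through to the projected range.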

And what did they find? From the Abstract of Gillett et al.:

Our analysis also leads to a relatively low and tightly-constrained estimate of Transient Climate Response of 1.3–1.8°C, and relatively low projections of 21st-century warming under the Representative Concentration Pathways.

The Transient Climate Response is the temperature rise at the time of the doubling of the atmospheric CO2 concentration, which will most likely occur sometime in the latter decades of this century. This means that the results of Gillett et al. are in direct accordance with the results of Michaels et al., published 10 years prior, which played a central role in precipitating the wrath of the Climategate scientists upon us, Chris de Freitas and Climate Research.

Both the Gillett et al. (2012) and the Michaels et al. (2002) studies show that climate models are over-predicting the amount of warming that is a result of human changes to the constituents of the atmosphere, and that when they are constrained to conform to actual observations of the earth’s temperature progression, the models project much less future warming (Figure 1).

Figure 1. Dashed lines show the projected course of 21st century global temperature rise as projected by the latest version (CanESM2) of the Canadian coupled ocean‐atmosphere climate model for three different future emission scenarios (RCPs). Colored bars represent the range of model projections when constrained by the past 160 years of observations. All uncertainty ranges are 5–95%. (figure adapted from Gillett et al., 2012: note the original figure included additional data not relevant to this discussion).

And a final word of advice to whoever was the editor at GRL that was responsible for overseeing the Gillett et al. publication—watch your back.

References:

Gillett, N.P., et al., 2012. Improved constraints on 21st-century warming derived using 160 years of temperature observations. Geophysical Research Letters, 39, L01704, doi:10.1029/2011GL050226.

Michaels, P.J., et al., 2002. Revised 21st century temperature projections. Climate Research, 23, 1-9.

SOURCE

Shale gas pollution fears dismissed

It is "extremely unlikely" that ground water supplies would be polluted by methane as a result of controversial "fracking" for shale gas, UK geologists have said.

And although the process, which uses high-pressure liquid pumped deep underground to fracture shale rock and release gas, caused two earthquakes in Lancashire last year, the quakes were too small to cause damage, they said.

Campaigners have called for a moratorium on fracking in the UK in the face of the earthquakes and amid fears it could lead to pollution of drinking water by methane gas or chemicals in the liquid used in the process.

Fracking has proved controversial in the US, where shale gas is already being exploited on a large scale and where footage has been captured of people able to set fire to the water coming out of their taps as a result of gas contamination.

But Professor Mike Stephenson, of the British Geological Survey, said most geologists thought it was a "pretty safe activity" and the risks associated with it were low.

He said the distance between groundwater supplies, around 40-50 metres below the surface, and the deep sources of gas in the shale, a mile or two underground, made it unlikely methane would leak into water as a result of fracking.

There was no evidence in peer-reviewed literature of pollution of water by methane as a result of fracking, he said, adding that the presence of the gas in US water supplies was likely to be natural. But a survey was currently being conducted in the UK, to establish a baseline of any gas naturally found in groundwater before drilling took place.

"If you don't know what the baseline is, you don't know if people are running a tight ship. There's natural methane in groundwater and you have to distinguish between what's there already and what might have leaked in."

He said two cases of methane pollution of water in the US, neither of which were due to fracking for shale gas, were the result of mismanagement. The UK has one of the strictest regulatory regimes in the world, he added.

Fracking by energy company Cuadrilla was halted in the Blackpool area last year, after two small quakes in the area which the geologists are certain were caused by fracking. Although they were felt by around 50 people in the area, they were too small to cause any damage.

SOURCE

On IPCC's exaggerated climate sensitivity and the emperor's new clothes

Nir Shaviv

A few days ago I had a very pleasant meeting with Andrew Bolt. He was visiting Israel and we met for an hour in my office. During the discussion, I mentioned that the writers of the recent IPCC reports are not very scientific in their conduct and realized that I should write about it here.

Normal science progresses through the collection of observations (or measurements), the conjecture of hypotheses, the making of predictions, and then through the usage of new observations, the modification of the hypotheses accordingly (either ruling them out, or improving them). In the global warming "science", this is not the case.

What do I mean?

From the first IPCC report until the previous IPCC report, climate predictions for future temperature increase were based on a climate sensitivity of 1.5 to 4.5°C per CO2 doubling. This range, in fact, goes back to the 1979 Charney report published by the National Academy of Sciences. That is, after 33 years of climate research and many billions of dollars of research, the possible range of climate sensitivities is virtually the same! In the last (AR4) IPCC report the range was actually slightly narrowed down to 2 to 4.5°C increase per CO2 doubling (without any good reason if you ask me). In any case, this increase of the lower limit will only aggravate the point I make below, which is as follows.

Because the possible range of sensitivities has been virtually the same, it means that the predictions made in the first IPCC report in 1990 should still be valid. That is, according to the writers of all the IPCC reports, the temperature today should be within the range of predictions made 22 years ago. But it is not!
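To see what a sensitivity quoted "per CO2 doubling" implies, here is a small sketch. It assumes the commonly used logarithmic approximation, in which the eventual (equilibrium) warming grows with the base-2 logarithm of the concentration ratio. That relation is not spelled out in this post, and the function name and example ratios are invented for illustration. Because it describes equilibrium warming, it does not by itself give the temperature expected in any particular year.

```python
import math


def equilibrium_warming(sensitivity_per_doubling, co2_ratio):
    """Equilibrium warming (degrees C) for a CO2 concentration ratio.

    Assumes warming scales with log2 of the concentration ratio, so a
    ratio of 2 returns exactly the sensitivity per doubling.
    """
    return sensitivity_per_doubling * math.log2(co2_ratio)


# Sensitivity range quoted in the text, in degrees C per CO2 doubling.
LOW_SENSITIVITY = 1.5
HIGH_SENSITIVITY = 4.5

# Illustrative concentration ratios: one full doubling and half a doubling.
for ratio in (2.0, math.sqrt(2.0)):
    low = equilibrium_warming(LOW_SENSITIVITY, ratio)
    high = equilibrium_warming(HIGH_SENSITIVITY, ratio)
    print(f"ratio {ratio:.3f}: {low:.2f} to {high:.2f} degrees C")
```

The point of the sketch is only that the warming expected for a given rise in CO2 scales directly with the assumed sensitivity, which is why an unchanged sensitivity range implies an unchanged range of predictions.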

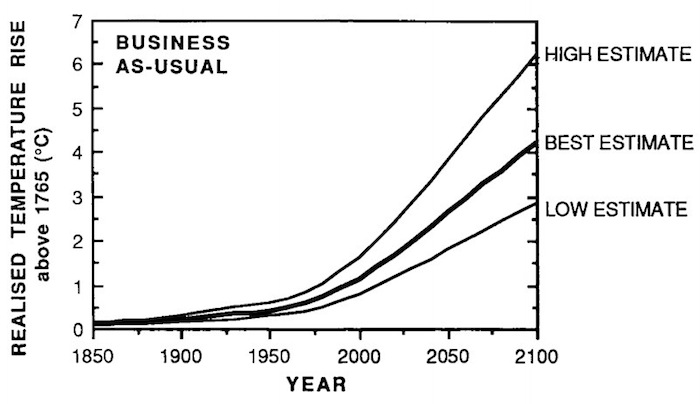

The business as usual predictions made in 1990, in the first IPCC report, are given in the following figure.

The business-as-usual predictions made in the first IPCC report, in 1990. Since the best range for the climate sensitivity (according to the alarmists) has not changed, the global temperature 22 years later should be within the predicted range. From this graph, we take the predicted slopes around the year 2000.

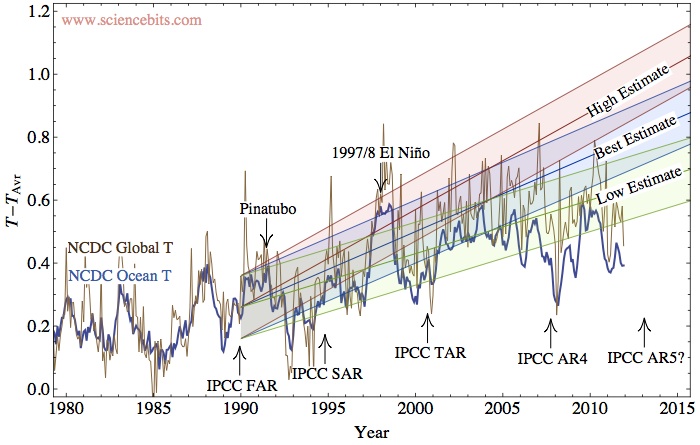

How well do these predictions agree with reality? In the next figure I plot the actual global and oceanic temperatures (as made by the NCDC). One can argue that either the ocean temperature or the global (ocean+land) temperature is better. The Ocean temperature includes smaller fluctuations than the land (and therefore less than the global temperature as well), however, if there is a change in the average global state, it should take longer for the oceans to react. On the other hand, the land temperature (and therefore the global temperature) is likely to include the urban heat island effect.

The NCDC ocean (blue) and global (brown) monthly temperature anomalies (relative to the 1900-2000 average temperatures) since 1980. The observed temperatures compared to the predictions made in the first IPCC report. Note that the width of the predictions is ñ0.1øC, which is roughly the size of the month to month fluctuations in the temperature anomalies.

From the simulations that my student Shlomi Ziskin has carried out for the 20th century, I think that the rise in the ocean temperature should be only about 90% of the global temperature warming since the 1980s, i.e., the global temperature rise should be no more than about 0.02-0.03°C larger than the oceanic warming (I'll write more about this work soon). As we can see from the graph, the difference is larger, around 0.1°C. It would be no surprise if this difference is due to the urban heat island effect. We know from McKitrick and Michaels' work that there is a spatial correlation between the land warming and different socio-economic indices (i.e., places which developed more had a higher temperature increase). This clearly indicates that the land data is tainted by some local anthropogenic effects and should therefore be considered cautiously. In fact, they claim that in order to remove the correlation, the land warming should be around 0.17°C per decade instead of 0.3°C. This implies that the global warming over 2.2 decades should be 0.085°C cooler, i.e., consistent with the difference!
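The arithmetic behind the 0.085°C figure is not spelled out above. The sketch below shows one way to reproduce it, assuming that the land correction is weighted by a land share of about 0.3 of the earth's surface. That weighting is my assumption, not something stated in the text, and the variable names are invented for illustration.

```python
# Land warming trends in degrees C per decade, as quoted in the text.
LAND_TREND_REPORTED = 0.30
LAND_TREND_ADJUSTED = 0.17

# Assumption (not stated in the text): land covers roughly 30% of the
# earth's surface, so a land-only correction is weighted by about 0.3.
LAND_FRACTION = 0.3

# Time span considered in the text: 22 years.
DECADES = 2.2


def global_correction(reported, adjusted, land_fraction, decades):
    """Reduction in global-mean warming implied by a land-only trend correction."""
    return (reported - adjusted) * land_fraction * decades


correction = global_correction(
    LAND_TREND_REPORTED, LAND_TREND_ADJUSTED, LAND_FRACTION, DECADES
)
print(f"{correction:.4f} degrees C")
```

Under that assumed weighting the result is about 0.086°C, in line with the figure quoted above and of the same size as the roughly 0.1°C gap between the global and oceanic curves.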

In any case, irrespective of whether you favor the global data or the oceanic data, it is clear that the temperature with its fluctuations is inconsistent with the "high estimate" in the IPCC-FAR (and this has been the case for a decade if you take the oceanic temperature, or for half a decade if you take the global temperature and do not admit that it is biased). In fact, it appears that only the low estimate can presently be consistent with the observations. Clearly then, earth's climate sensitivity should be revised down, and the upper range of sensitivities should be discarded and with it, the apocalyptic scenarios which they imply. For some reason, I doubt that the next AR5 report will consider this inconsistency, or that it will revise down the climate sensitivity (a revision which would be consistent with other empirical indicators of climate sensitivity). I am also curious when the general public will realize that the emperor has no clothes.

Of course, Andrew commented that the alarmists will always claim that there might be something else which has been cooling, and we will pay for our CO2 sevenfold later. The short answer is that "you can fool some of the people some of the time, but you cannot fool all of the people all of the time!" (or as it should be adapted here, "you cannot fool most of the people indefinitely!").

The longer answer is that even climate alarmists realize that there is a problem, but they won't admit it in public. In private, as the Climategate e-mails have revealed, they know it is a problem. In October 2009, Kevin Trenberth wrote to his colleagues:

The fact is that we can't account for the lack of warming at the moment and it is a travesty that we can't. The CERES data published in the August BAMS 09 supplement on 2008 shows there should be even more warming: but the data are surely wrong. Our observing system is inadequate.

However, instead of reaching the reasonable conclusion that the theory should be modified, the data are "surely wrong". (This, btw, is a sign of a new religion, since no fact can disprove the basic tenets).

When you think of it, those climatologists are in a rather awkward position. If you exclude the denial option (apparent in the above quote), then the only way to explain the "travesty" is if you have a joker card, something which can provide warming, but which the models don't take into account. It is a catch-22 for the modelers. If they admit that there is a joker, it disarms their claim that since one cannot explain the 20th century warming without the anthropogenic contribution, the warming is necessarily anthropogenic. If they do not admit that there is a joker, they must conclude (as described above) that the climate sensitivity must be low. But if it is low, one cannot explain the 20th century without a joker. A classic Yossarian dilemma.

This joker card is of course the large solar effects on climate.

SOURCE

Warmist makes private admission

Ed Cook: "in certain ways the [Medieval Warm] period may have been more climatically extreme than in modern times"

Climategate Email 5089:

A growing body of evidence clearly shows that hydroclimatic variability during the putative MWP (more appropriately and inclusively called the "Medieval Climate Anomaly" or MCA period) was more regionally extreme (mainly in terms of the frequency and duration of megadroughts) than anything we have seen in the 20th century, except perhaps for the Sahel. So in certain ways the MCA period may have been more climatically extreme than in modern times. The problem is that we have been too fixated on temperature, especially hemispheric and global average temperature, and IPCC is enormously guilty of that. So the fact that evidence for "warming" in tree-ring records during the putative MWP is not as strong and spatially homogeneous as one would like might simply be due to the fact that it was bloody dry too in certain regions, with more spatial variability imposed on growth due to regional drought variability even if it were truly as warm as today. The Calvin cycle and evapotranspiration demand surely prevail here: warm-dry means less tree growth and a reduced expression of what the true warmth was during the MWP.

SOURCE

No spark in electric car sales for a decade, execs say

Auto execs think it will be a decade before electric cars reach 15 percent of annual global sales

Despite continued heavy investment by auto makers in electric propulsion technologies, global automotive executives don't expect e-car sales to exceed 15 percent of annual global auto sales before 2025, according to the 13th annual global automotive survey conducted by KPMG LLP, the U.S. audit, tax, and advisory firm.

In polling 200 C-level executives in the global automotive industry for the 2012 automotive survey, KPMG found that nearly two-thirds (65 percent) of executives don't expect electrified vehicles (meaning all e-vehicles, from full hybrids to FCEVs) to exceed 15 percent of global annual auto sales before 2025.

Executives in the U.S. and Western Europe expect even less adoption, projecting e-vehicles will only account for 6-10 percent of global annual auto sales.

"Electric vehicles are still in their infancy, and while we've seen some recent model introductions, consumer demand has so far been modest," said Gary Silberg, National Automotive Industry leader for KPMG LLP. "While we can expect no more than modest demand in the foreseeable future, we can also expect OEMs to intensify investment, fully appreciating what is at stake in a very competitive industry."

Automakers Inject Investments into Range of Electric Technologies

Despite the relatively modest sales projections for electric vehicles over the next 15 years, automotive executives in the KPMG survey indicate that a wide range of electric technologies will be an increased focus of their investment matrix. In fact, over the next two years:

83 percent say automakers will increase investment in e-motor production,

81 percent say investment in battery (pack/cell) technology will rise,

76 percent expect increased investment in power electronics for e-cars, and,

65 percent predict increased investment in fuel cell (hydrogen) technology.

Additionally, executives expect that hybrid fuel systems, battery electric power and fuel cell electric power will be the alternative propulsion technologies to attract the most auto industry investment over the next five years.

Placing Bets `Across the Board'

"What's interesting is that automakers are placing bets across the board, and large bets at that, because no one knows which technology will ultimately win the day with consumers," added Silberg.

"In last year's KPMG survey, execs told us it would be more than five years before the industry is able to offer an electric vehicle that is as affordable as traditional fuel vehicles for mainstream buyers. It will be interesting to see how consumer adoption progresses as automakers discover ways to offer these electrified cars at better price points and the infrastructure for these vehicles becomes more robust and accommodating," he said.

However, despite all the investment and energy being focused on electric platforms, nearly two-thirds (61 percent) of executives say the optimization (so-called downsizing) of internal combustion engines (ICE) still offers greater efficiency and CO2 reduction potential than any electric vehicle technology based on the current energy mix.

More HERE

***************************************

For more postings from me, see DISSECTING LEFTISM, TONGUE-TIED, EDUCATION WATCH INTERNATIONAL, POLITICAL CORRECTNESS WATCH, FOOD & HEALTH SKEPTIC, GUN WATCH, AUSTRALIAN POLITICS, IMMIGRATION WATCH INTERNATIONAL and EYE ON BRITAIN. My Home Pages are here or here or here. Email me (John Ray) here. For readers in China or for times when blogger.com is playing up, there are mirrors of this site here and here

*****************************************
